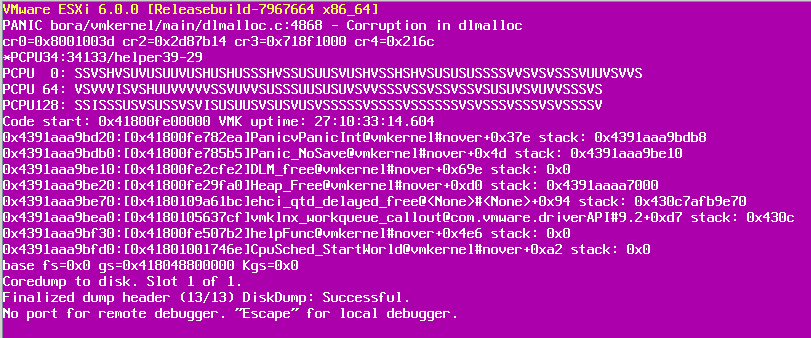

New HPE Customized ESXi Image Not Supported on ProLiant BL c-Class Servers

HPE publishes customized ESXi images for ProLiant and other HPE server products. The January 2021 release, however, cannot be installed on ProLiant BL c-Class server blades such as the BL460c.

Which Versions Have Been Released?

The following HPE Custom Images for VMware were released in January 2021:

- `VMware-ESXi-7.0.1-17325551-HPE-701.0.0.10.6.3.9-Jan2021.iso`
- `VMware-ESXi-6.7.0-17167734-HPE-Gen9plus-670.U3.10.6.3.8-Jan2021.iso`
- `VMware-ESXi-6.5.0-Update3-17097218-HPE-Gen9plus-650.U3.10.6.3.8-Dec2020.iso`

Which Hardware Products Are Not Supported?

These images cannot be installed on the following server products:

- HPE ProLiant BL460c Gen10 Server Blade
- HPE ProLiant BL460c Gen9 Server Blade
- HPE ProLiant BL660c Gen9 Server Blade

Which Versions Are Supported on ProLiant BL c-Class Servers?

Use the following October 2020 images on ProLiant BL c-Class servers instead:

- `VMware-ESXi-7.0.1-16850804-HPE-701.0.0.10.6.0.40-Oct2020.iso`
- `VMware-ESXi-6.7.0-Update3-16713306-HPE-Gen9plus-670.U3.10.6.0.83-Oct2020.iso`
- `VMware-ESXi-6.5.0-Update3-16389870-HPE-Gen9plus-650.U3.10.6.0.86-Oct2020.iso`

What Should We Do in the Future?

Don't worry: the next releases will support ProLiant BL c-Class servers again. Until a new HPE Custom Image for VMware is released, keep using the October 2020 images listed above on these blades.

See Also

- Network Connection Problem on HPE FlexFabric 650 (FLB/M) Adapter

References

- Notice: HPE c-Class BladeSystem – The HPE Custom Images for VMware Released in January 2021 Are Not Supported on ProLiant BL c-Class Servers
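If you stage ISOs with a script, the image-selection rule from this notice can be expressed as a small lookup. The following is a minimal sketch, not an HPE tool. The ISO names and affected models come from the notice. The short model strings (for example `"BL460c Gen10"`) and the function names `esxi_branch` and `recommended_image` are hypothetical and chosen for illustration. Images that the notice does not mention are passed through unchanged, because the notice says nothing about them.

```python
import re

# January 2021 images that, per the notice, do not support BL c-Class blades.
JAN_2021_IMAGES = {
    "VMware-ESXi-7.0.1-17325551-HPE-701.0.0.10.6.3.9-Jan2021.iso",
    "VMware-ESXi-6.7.0-17167734-HPE-Gen9plus-670.U3.10.6.3.8-Jan2021.iso",
    "VMware-ESXi-6.5.0-Update3-17097218-HPE-Gen9plus-650.U3.10.6.3.8-Dec2020.iso",
}

# October 2020 images to use on BL c-Class blades, keyed by ESXi branch.
BL_CCLASS_IMAGES = {
    "7.0": "VMware-ESXi-7.0.1-16850804-HPE-701.0.0.10.6.0.40-Oct2020.iso",
    "6.7": "VMware-ESXi-6.7.0-Update3-16713306-HPE-Gen9plus-670.U3.10.6.0.83-Oct2020.iso",
    "6.5": "VMware-ESXi-6.5.0-Update3-16389870-HPE-Gen9plus-650.U3.10.6.0.86-Oct2020.iso",
}

# Affected blades; these short model strings are a hypothetical naming scheme.
AFFECTED_MODELS = {"BL460c Gen10", "BL460c Gen9", "BL660c Gen9"}


def esxi_branch(iso_name: str) -> str:
    """Return the ESXi major.minor branch (e.g. '6.7') parsed from an ISO file name."""
    match = re.match(r"VMware-ESXi-(\d+)\.(\d+)\.", iso_name)
    if match is None:
        raise ValueError(f"unrecognised ISO name: {iso_name}")
    return f"{match.group(1)}.{match.group(2)}"


def recommended_image(model: str, iso_name: str) -> str:
    """Swap an unsupported January 2021 image for the matching October 2020 one.

    Any other model/image combination is returned unchanged (hypothetical helper).
    """
    if model in AFFECTED_MODELS and iso_name in JAN_2021_IMAGES:
        return BL_CCLASS_IMAGES[esxi_branch(iso_name)]
    return iso_name


if __name__ == "__main__":
    # prints VMware-ESXi-7.0.1-16850804-HPE-701.0.0.10.6.0.40-Oct2020.iso
    print(recommended_image(
        "BL460c Gen10",
        "VMware-ESXi-7.0.1-17325551-HPE-701.0.0.10.6.3.9-Jan2021.iso",
    ))
```

Keying the fallback table by ESXi branch keeps each blade on the same major version it was planned for. It only steps back from the January 2021 build to the October 2020 build.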