I/O Block Size (> 3 MB) Best Practice on EMC AFA/HFA and Linux (Physical and Virtual)

We have a problem on our Oracle Database servers, which are hosted on Oracle Linux: when the databases are put into Maximum Availability protection mode, some workloads degrade the performance of the whole database ecosystem. These workloads come from tasks run by the application servers, and the tasks need heavy read/write I/O on the storage arrays. We use the Unity/Unity XT family (All Flash/Hybrid Flash) in most of our projects. I searched a lot and found nothing at first. I had only one clue: the I/O block size was very large while the database servers were performing these tasks.

What’s Our Story?

The problem happened every night. The servers were healthy, the storage arrays were healthy, and there was no problem in the hardware layer, but the storage arrays were reporting latency on LUNs (even all-flash LUNs).

As a first step, I checked the performance metrics on our Unity storage arrays. All metrics related to the storage hardware were fine, but I guessed that there was an issue with the storage processor cache.

So I started a discussion on the community:

EMC Unity: Cache Read Hit – LUN Latency

I found nothing, so I started another discussion on the community:

High Read/Write Cache Miss Hit on All Flash Array

I actually got nothing, even after searching and reading many documents about caching on Unity.

The Clue!

As I mentioned, the workload generates a very large I/O block size on the storage, and it seems the storage arrays (All Flash and Hybrid) couldn’t handle it. The block size was more than 3 MB in some cases. I noticed that when the I/O block size was smaller, the arrays served more IOPS than with the larger block size, yet latency was lower.

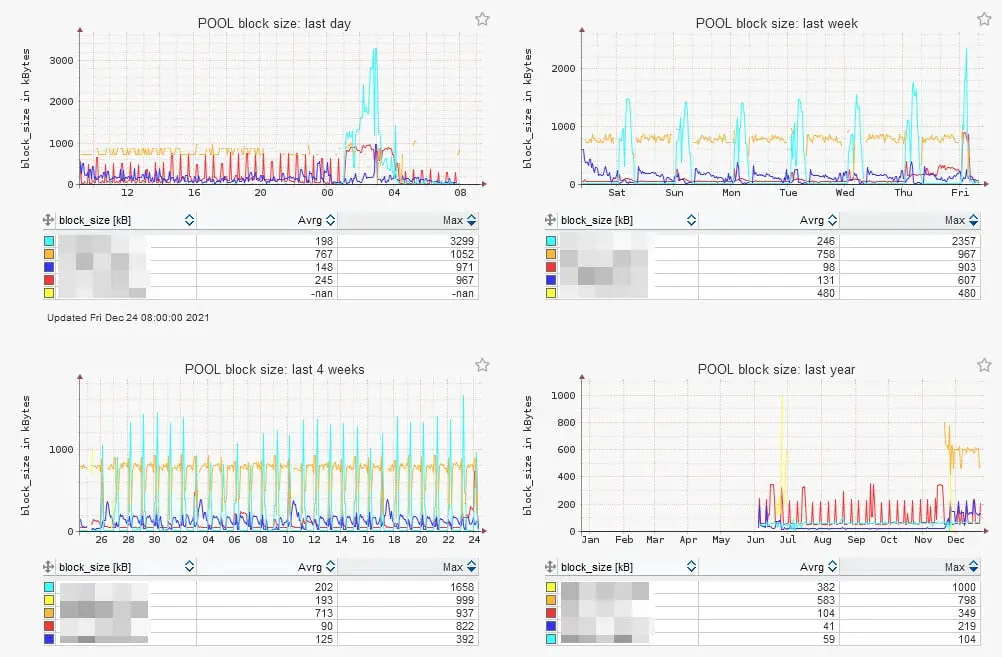

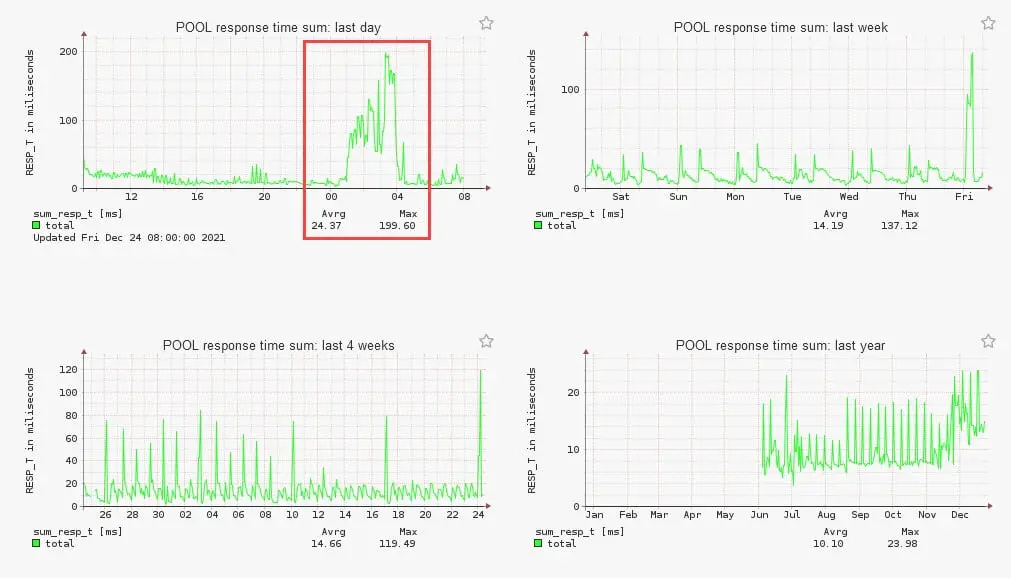

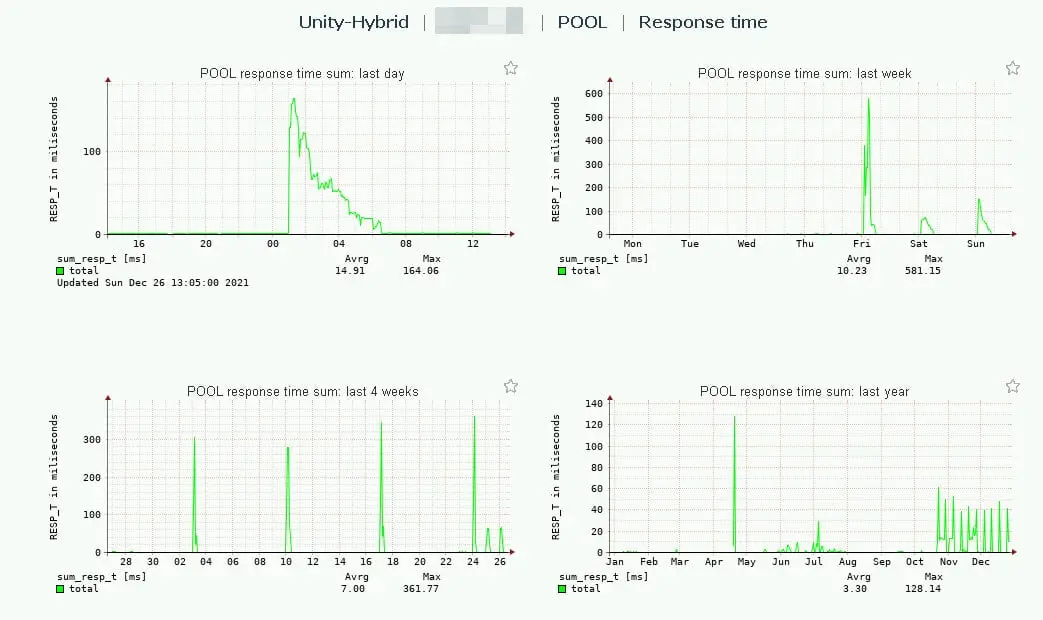

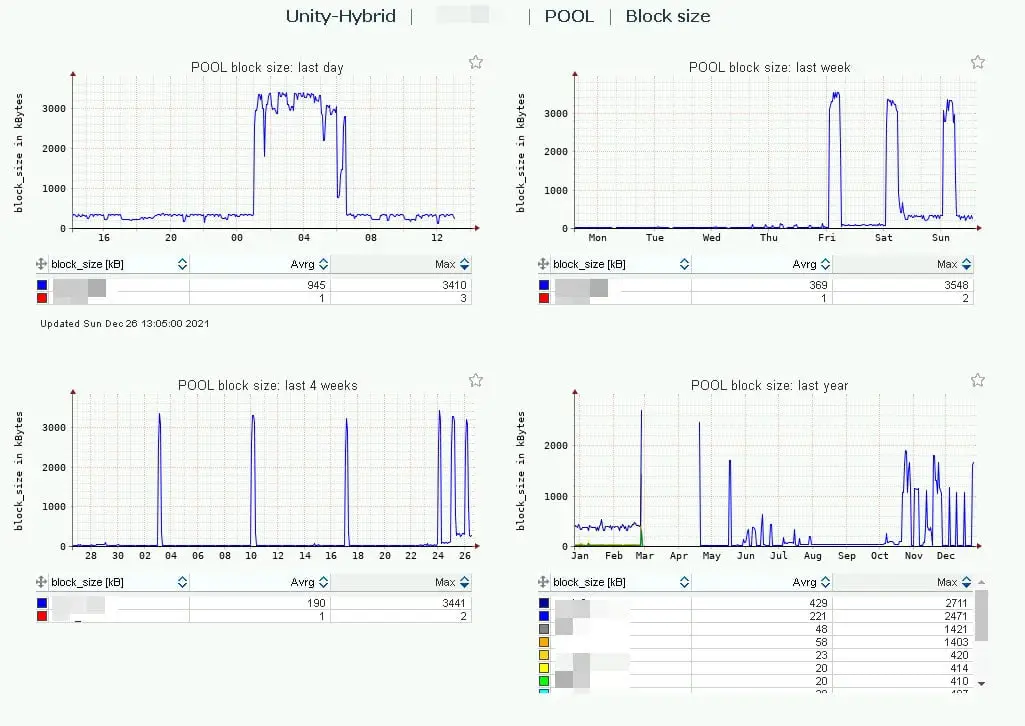

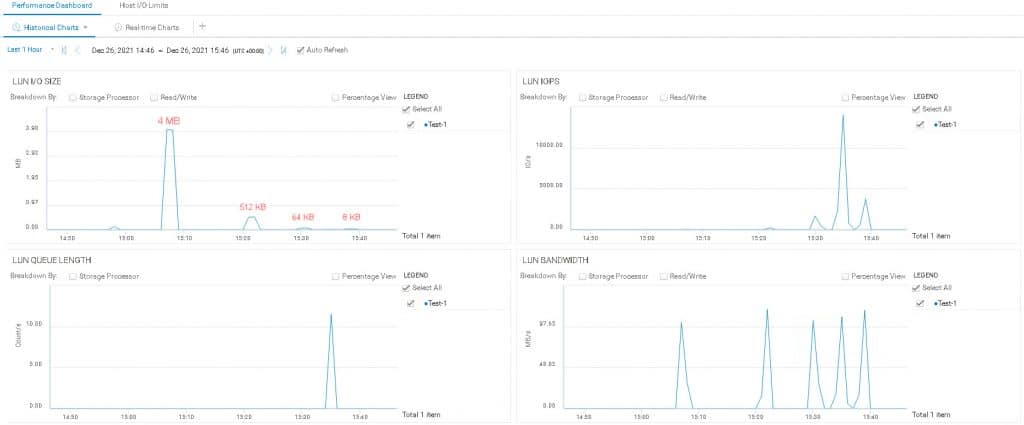

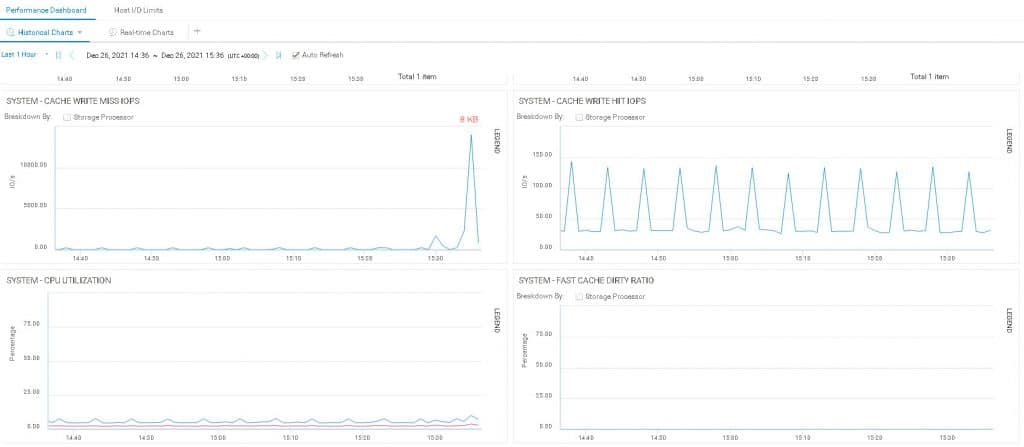

Screenshots 1 and 2 show how a large block size affects performance, and the other screenshots show more details about IOPS and bandwidth. The screenshots come from two storage arrays: the first is an AFA and the second is hybrid, but both use flash disks.

The Suspects!

Who is generating the I/O? The database server, of course, so our first suspect was Oracle Database!

Actually, Oracle Database has its own file system and, like other file systems, can create disks with a specific I/O size. A small I/O size is usually recommended for online transaction processing, so was anything configured wrong?

It seems not: if the Oracle Database block size were very large, the issue would happen in most cases, not only with some workloads!

I found this parameter in this regard: db_file_multiblock_read_count
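To see why this parameter is relevant to I/O size: it caps how many database blocks Oracle reads in one I/O during a sequential scan, so the largest multiblock read is roughly the database block size multiplied by this count. A minimal sketch of that arithmetic follows. The function name is made up for illustration, and the 8 KB block size and count of 128 are assumed example values, not settings taken from our servers.

```shell
# multiblock_read_kb DB_BLOCK_SIZE_BYTES MULTIBLOCK_READ_COUNT
# Prints the largest multiblock read size in KB.
# Both inputs below are assumed example values, not measured settings.
multiblock_read_kb() {
    echo $(( $1 * $2 / 1024 ))
}

# Example: 8192-byte blocks with a read count of 128
multiblock_read_kb 8192 128
```

With these example inputs the largest multiblock read comes out at 1024 KB, i.e. 1 MB per read request before the operating system gets involved.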

I started another discussion on EMC forum:

Oracle Database Recommended I/O Size on EMC Unity

Same as before, I got nothing!

The second suspect was the operating system, Linux in this case. I read all the related documents about Unity and Linux.

But there was no best practice for I/O size, or anything like it, for Unity.

What’s “max_sectors_kb” in Linux?

As I mentioned, there was no information about Unity, but EMC had some recommendations for other products such as PowerMax and PowerStore.

EMC had pointed to “max_sectors_kb” in different Linux kernels, and it seemed that we had found our main suspect.

Vendor Quotes

Here is the description of “max_sectors_kb” that Red Hat published in an article:

This is the maximum number of kilobytes that the block layer will allow for a filesystem request. This value could be overwritten, but it must be smaller than or equal to the maximum size allowed by the hardware.

Another from Red Hat:

Specifies the maximum size of an I/O request in kilobytes. The default value is 512 KB. The minimum value for this parameter is determined by the logical block size of the storage device. The maximum value for this parameter is determined by the value of max_hw_sectors_kb. Certain solid-state disks perform poorly when the I/O requests are larger than the internal erase block size. To determine if this is the case of the solid-state disk model attached to the system, check with the hardware vendor, and follow their recommendations. Red Hat recommends max_sectors_kb to always be a multiple of the optimal I/O size and the internal erase block size. Use a value of logical_block_size for either parameter if they are zero or not specified by the storage device.
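The bounds in that quote can be checked before writing a new value. The sketch below compares a candidate value against max_hw_sectors_kb and logical_block_size as exposed under the device's queue directory in sysfs. The function name and the optional sysfs-root argument are my own additions for illustration; the second argument exists only so the function can be tried against a fake directory tree.

```shell
# check_max_sectors_kb DEVICE VALUE_KB [SYSFS_ROOT]
# Returns 0 and prints "ok" if VALUE_KB lies within the bounds Red Hat describes:
#   - not larger than max_hw_sectors_kb
#   - not smaller than the logical block size
# SYSFS_ROOT defaults to /sys (hypothetical override, useful for dry runs).
check_max_sectors_kb() {
    dev=$1
    val=$2
    q="${3:-/sys}/block/$dev/queue"
    hw=$(cat "$q/max_hw_sectors_kb") || return 2
    lbs=$(cat "$q/logical_block_size") || return 2
    if [ "$val" -gt "$hw" ]; then
        echo "too large: $val KB exceeds max_hw_sectors_kb ($hw KB)"
        return 1
    fi
    if [ $(( val * 1024 )) -lt "$lbs" ]; then
        echo "too small: $val KB is below the logical block size ($lbs bytes)"
        return 1
    fi
    echo "ok"
}
```

For example, `check_max_sectors_kb dm-3 64` would check 64 KB against a device named dm-3 (a placeholder name). The check for a multiple of the optimal I/O size is left out here on purpose, because that value is often zero or unspecified.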

I also found an old HPE document about a similar parameter. The document was related to EVA, and HPE mentioned that:

Large IO size can overload the Storage internals.

Test Results!

First, we run Oracle Linux on our database server, and I have to mention that the “max_sectors_kb” value there is 4096, which is equal to 4 MB.
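For reference, the current value can be read from sysfs next to the hardware ceiling. This is a small sketch, not the exact command I ran: the function name is invented, and the optional second argument (a sysfs root other than /sys) is only there so the function can be tried on a fake tree.

```shell
# show_io_limits DEVICE [SYSFS_ROOT]
# Prints the current and hardware I/O size limits of a block device in KB.
# DEVICE is the name under /sys/block, e.g. sdb or dm-3 (placeholders).
show_io_limits() {
    dev=$1
    q="${2:-/sys}/block/$dev/queue"
    cur=$(cat "$q/max_sectors_kb") || return 1
    hw=$(cat "$q/max_hw_sectors_kb") || return 1
    echo "$dev max_sectors_kb=$cur max_hw_sectors_kb=$hw"
}
```

Calling `show_io_limits dm-3` on a real host prints one line per device, which makes it easy to compare multipath devices before and after a change.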

I prepared a LUN on storage array with flash disks for these tests. Let’s see the results.

First Test

For the first test, I created a file on the partition with the “dd” command. The value of “bs” is 8 MB for all tests.

The “dd” command: dd if=/dev/urandom of=/mountpoint/file.txt bs=8M count=1000

The result was interesting because the kernel doesn’t issue I/O requests larger than 4 MB, the “max_sectors_kb” limit.

There was high latency and response time (more than 250 ms), but only 40 IOPS. Bandwidth was 166 MB/s.
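These figures can be sanity-checked with simple arithmetic. The sketch below assumes each 8 MB write reaches the block layer as one contiguous request, which buffered writes do not guarantee, and uses made-up function names. It shows how many requests one write is split into and what throughput 40 IOPS of 4 MB would give.

```shell
# requests_per_write WRITE_KB MAX_SECTORS_KB
# Number of block-layer requests one write is split into (rounded up).
requests_per_write() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

# throughput_mb IOPS IO_SIZE_KB
# Approximate throughput in MB/s (integer division).
throughput_mb() {
    echo $(( $1 * $2 / 1024 ))
}

requests_per_write 8192 4096   # 8 MB dd block, max_sectors_kb=4096
throughput_mb 40 4096          # 40 IOPS at 4 MB per request
```

The first call prints 2 and the second prints 160, which is close to the 166 MB/s that was measured with about 40 IOPS.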

Second Test

I changed “max_sectors_kb” on the disk (not the partition) with the command below:

echo 512 > /sys/block/dm-x/queue/max_sectors_kb

The command changes the “max_sectors_kb” value for a specific device until the next rescan or reboot.
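On a multipath device, the same write can be wrapped in a small function that also covers the underlying path devices listed under the device's slaves directory. Touching the path devices is an assumption on my side and not something the tests in this post did; the function name and the optional sysfs-root argument are also hypothetical. Like the plain echo, the change does not survive a rescan or reboot.

```shell
# set_max_sectors_kb DEVICE VALUE_KB [SYSFS_ROOT]
# Writes VALUE_KB to every path device under DEVICE/slaves (assumption:
# the paths should match the multipath device), then to DEVICE itself.
# Needs root on a real system. SYSFS_ROOT defaults to /sys.
set_max_sectors_kb() {
    dev=$1
    val=$2
    root=${3:-/sys}
    for s in "$root/block/$dev/slaves"/*; do
        [ -e "$s" ] || continue          # no slaves: plain disk, skip
        path=${s##*/}                    # e.g. sdb
        echo "$val" > "$root/block/$path/queue/max_sectors_kb" || return 1
    done
    echo "$val" > "$root/block/$dev/queue/max_sectors_kb" || return 1
    # Read the value back so the caller can confirm what is in effect
    cat "$root/block/$dev/queue/max_sectors_kb"
}
```

A call such as `set_max_sectors_kb dm-3 512` (dm-3 being a placeholder) prints the value that is in effect afterwards, so a rejected write is easy to spot.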

Then, I ran the “dd” command again.

IOPS was more than 1000 and latency dropped below 50 ms. Bandwidth was the same as before on both the storage and the operating system.

Third Test

I changed the value to 64, one-eighth of the previous value.

IOPS increased to more than 2K. Latency dropped below 20 ms. Bandwidth was again the same as before.

Fourth Test

I changed the value to 8, one-eighth of the previous value.

IOPS increased to more than 10K. Latency dropped below 3 ms. Bandwidth was again the same as before.

But there was some LUN queue length, and Cache Write Miss IOPS was too high on Unity monitoring compared to the previous tests.

Fifth Test

I changed the value to 32.

IOPS dropped to 4K, but latency and response time were the same as in the previous test with the smaller I/O size.

Tests Conclusion

The test results show that a lower “max_sectors_kb” value reduces response time and latency.

But IOPS increases, and the smallest values put more load on the storage cache and I/O processing, as the queue length and cache write misses in the fourth test show.

Recommendation For Production Environment

Now, what should I do for our production environment?

I think 64 KB, or another value smaller than 512 KB, is good for performance, but some tests are required before you change the value.

I applied the value to the device temporarily during my tests, but if you want to change the value permanently, there are a couple of ways to achieve it:

- DM-Multipath Configuration

- UDEV
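As a rough sketch of the UDEV route, the function below writes a one-line rule that sets max_sectors_kb on matching SCSI disks whenever they are added or changed. Several things here are assumptions to verify on your own hosts: the function name, the rule file name, and the vendor string "DGC", which is what I would expect Unity LUNs to report. Check the vendor file under the device directory in sysfs before relying on it.

```shell
# write_udev_rule VALUE_KB VENDOR RULE_FILE
# Writes a udev rule that sets max_sectors_kb for whole SCSI disks (sd*)
# whose SCSI vendor matches VENDOR. The vendor string and the rule file
# name are assumptions for illustration only.
write_udev_rule() {
    printf 'ACTION=="add|change", SUBSYSTEM=="block", KERNEL=="sd*", ENV{DEVTYPE}=="disk", ATTRS{vendor}=="%s", ATTR{queue/max_sectors_kb}="%s"\n' \
        "$2" "$1" > "$3"
}

# Example (as root, after testing the value):
#   write_udev_rule 64 DGC /etc/udev/rules.d/99-max-sectors-kb.rules
#   udevadm control --reload-rules
#   udevadm trigger --subsystem-match=block
```

This rule only covers the sd path devices. The multipath device itself is better handled through the DM-Multipath configuration listed above, which is not sketched here.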

This issue happens in both virtual and physical environments, so applying the best practices on virtual servers is also recommended.

Conclusion

A large block size can affect the performance of the whole storage array, even on All-Flash arrays, so EMC should publish the configuration for Unity and also recommend values for different workloads.

The configurations and recommendations could be added to the Host Connectivity Guide or another best-practices document for Unity.

Further Reading

Veeam BR – Backup Mode I/O Consideration

[How To]: Configure HPE iLO via ESXi

Oracle Database CPU Core Limit For Dummies

ESXi 5.5, 6.x IOPS Limit Not Working – Disk.SchedulerWithReservation

External Links

How to set custom ‘max_sectors_kb’ option for devices under multipathd control?

What is the kernel parameters related to maximum size of physical I/O requests?

Disk rescan will change max_sectors_kb to default value

Operating system configuration and tuning

MULTIPATHS DEVICE CONFIGURATION ATTRIBUTES

HP EVA Storage – Setting Max IO Transfer Size

Default Maximum IO Size Change in Linux Kernel

[Engineering Notes] I/O Limits: block sizes, alignment and I/O hints

Performance Tuning on Linux — Disk I/O

STORAGE I/O ALIGNMENT AND SIZE

Increasing the maximum I/O size in Linux | Martins Blog (wordpress.com)

Dell EMC Host Connectivity Guide for Linux (delltechnologies.com)

Oracle Database – How To Unset DB_FILE_MULTIBLOCK_READ_COUNT to Default Value (thegeeksearch.com)