Guest Connected vs RAW Device Mapping (RDM)

What’s RAW Device Mapping?

Raw Device Mapping (RDM) is one of the oldest VMware vSphere features. It was introduced to work around limitations of virtualized environments, such as the virtual disk size limit and the difficulty of deploying services on top of failover clustering.

You can use a raw device mapping (RDM) to store virtual machine data directly on a SAN LUN, instead of storing it in a virtual disk file. You can add an RDM disk to an existing virtual machine, or you can add the disk when you customize the virtual machine hardware during the virtual machine creation process.

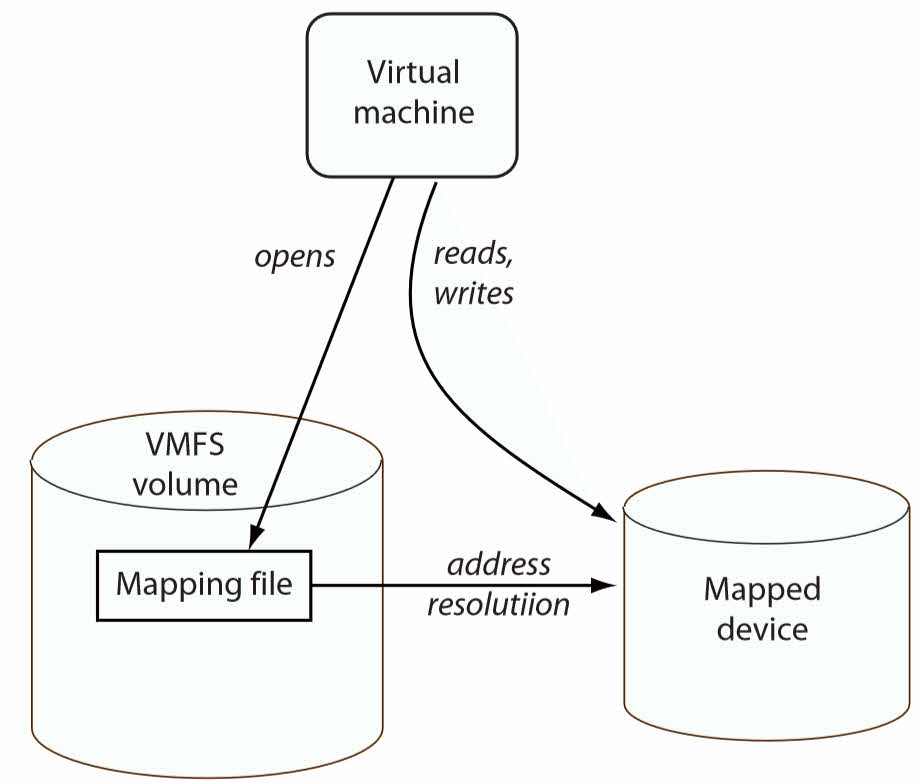

When you give a virtual machine direct access to an RDM disk, you create a mapping file that resides on a VMFS datastore and points to the LUN. Although the mapping file has the same .vmdk extension as a regular virtual disk file, the mapping file contains only mapping information. The virtual disk data is stored directly on the LUN.

During virtual machine creation, a hard disk and a SCSI or SATA controller are added to the virtual machine by default, based on the guest operating system that you select. If this disk does not meet your needs, you can remove it and add an RDM disk at the end of the creation process.

RDM has two compatibility modes, each suited to different scenarios:

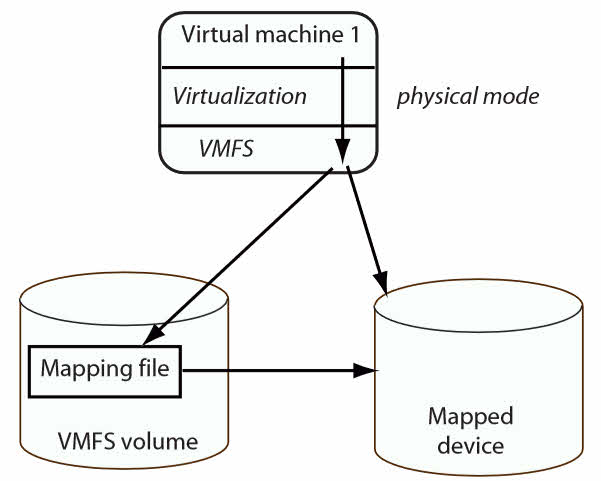

| Physical | Allows the guest operating system to access the hardware directly. Physical compatibility is useful if you are using SAN-aware applications on the virtual machine. However, a virtual machine with a physical compatibility RDM cannot be cloned, made into a template, or migrated if the migration involves copying the disk. |

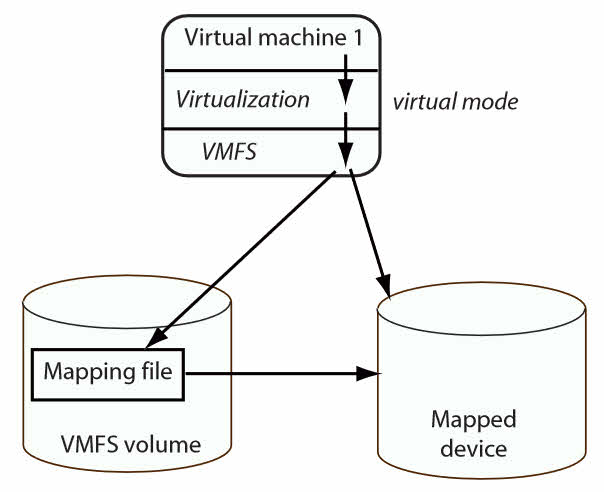

| Virtual | Allows the RDM to behave as if it were a virtual disk, so that you can use such features as taking snapshots, cloning, and so on. When you clone the disk or make a template out of it, the contents of the LUN are copied into a .vmdk virtual disk file. When you migrate a virtual compatibility mode RDM, you can migrate the mapping file or copy the contents of the LUN into a virtual disk. |
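Besides the vSphere Client, the mapping file can also be created from the ESXi shell with `vmkfstools`, where `-r` creates a virtual compatibility mapping and `-z` a physical compatibility (pass-through) mapping. The following is a minimal sketch that only builds the command line; the helper name `rdm_mapping_command`, the LUN identifier, and the datastore path are hypothetical and used for illustration only.

```python
import shlex

# vmkfstools flags for the two RDM compatibility modes.
RDM_FLAGS = {
    "virtual": "-r",   # --createrdm
    "physical": "-z",  # --createrdmpassthru
}


def rdm_mapping_command(device, mapping_file, mode="virtual"):
    """Return the argv list that would create an RDM mapping file.

    device       -- path of the raw LUN under /vmfs/devices/disks/
    mapping_file -- path of the .vmdk mapping file on a VMFS datastore
    mode         -- "virtual" or "physical" compatibility
    """
    if mode not in RDM_FLAGS:
        raise ValueError(f"unknown compatibility mode: {mode!r}")
    return ["vmkfstools", RDM_FLAGS[mode], device, mapping_file]


if __name__ == "__main__":
    # Hypothetical LUN identifier and datastore path, for illustration only.
    cmd = rdm_mapping_command(
        "/vmfs/devices/disks/naa.600000000000000000000000000000aa",
        "/vmfs/volumes/datastore1/vm01/vm01_rdm.vmdk",
        mode="physical",
    )
    print(shlex.join(cmd))
```

The resulting `.vmdk` holds only the mapping information, so it still has to be attached to the virtual machine as an existing hard disk.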

What’s Guest Connected or Direct Attached?

When a virtual disk is assigned to a virtual machine, the guest OS sees it as a local disk, even if the virtual disk is located on enterprise SAN storage. Regular virtual disks have limitations: there is no standard way to share them between virtual machines without affecting performance, restricting the configuration, or losing some features.

In the past, when you asked a vSphere administrator for virtual machines to build a cluster, they handed over a list of limitations along with the virtual machines, because RDM and VMDK sharing were the only choices for implementing clustering in vSphere environments. Not long ago, virtual machines did not support large disks either, and RDM was the only way around that issue. Now, and especially in vSphere 6.7, VVol support enables up to 5 WSFC cluster nodes to access the same shared VVol disk.

All of the above solutions require the hypervisor to handle the I/O and need configuration changes on the virtual machine, some of which may require powering it off. None of them is actually Guest Connected or Direct Attached.

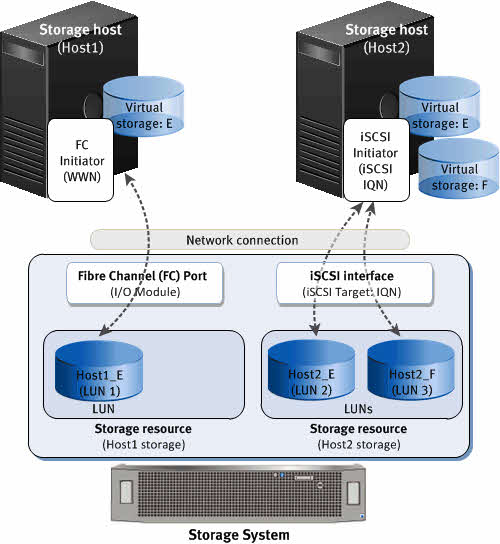

What about a simpler and cheaper way to attach shared disk space directly to the guest OS, rather than to the hypervisor or the virtual machine? iSCSI is one of the best choices: it performs well and costs less than Fibre Channel. vSphere still has no native solution for attaching SAN LUNs directly to a virtual machine: NPIV still needs RDM disks, and both SR-IOV and DirectPath I/O require reserving all of the virtual machine's memory.

Most guest OSes support iSCSI natively, and preparing an iSCSI initiator is not complicated. When implementing a failover cluster, the required LUNs can be presented from the storage array to each node of the cluster.
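As an illustration of how little is involved on a Linux guest, the sketch below builds the two open-iscsi `iscsiadm` command lines for target discovery and login. It assumes the guest uses the open-iscsi initiator; the helper name `iscsi_login_commands`, the portal address, and the target IQN are hypothetical placeholders, and the commands are only constructed, not executed.

```python
import shlex


def iscsi_login_commands(portal, target_iqn):
    """Return the open-iscsi command lines for discovery and login.

    portal     -- "address:port" of the storage array's iSCSI portal
    target_iqn -- IQN of the target that exposes the shared LUN
    """
    # Step 1: ask the portal which targets it offers (SendTargets discovery).
    discover = ["iscsiadm", "-m", "discovery", "-t", "sendtargets", "-p", portal]
    # Step 2: log in to the chosen target; its LUNs then appear as SCSI disks.
    login = ["iscsiadm", "-m", "node", "-T", target_iqn, "-p", portal, "--login"]
    return [discover, login]


if __name__ == "__main__":
    # Hypothetical portal and IQN, for illustration only.
    for cmd in iscsi_login_commands(
        "192.0.2.10:3260", "iqn.2001-04.com.example:storage.lun1"
    ):
        print(shlex.join(cmd))
```

Running the same two steps on every cluster node gives each guest its own session to the shared LUN, without any change to the virtual machine's hardware configuration.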

Comparing iSCSI vs RDM (Virtual – Physical)

Comparing a guest-connected iSCSI LUN with RDM (virtual and physical compatibility) helps you choose the best option for your virtual environment. The comparison below is based on the compatibility of vSphere features with RDM disks:

| | RDM (Virtual Compatibility) | RDM (Physical Compatibility) | iSCSI |
| --- | --- | --- | --- |
| Fault Tolerance | Supported | Not supported | Absolutely supported |
| VM Snapshot | Supported | Not supported | Possible, but there is no need to back up iSCSI shared storage via backup software |
| VM Clone | Supported, but the RDM disk is converted to a VMDK | Not supported | You can clone the virtual machine without its iSCSI disks. Positive point! |
| vMotion | *Supported | *Supported | Supported |
| Storage vMotion | Supported | Supported | Supported |
| RDM to VMDK | Supported via storage migration | Partially supported; must be changed to virtual compatibility mode first | Really? You need it? |
| VMkernel Handling | Mapped to the guest like a VMDK | VMkernel passes all SCSI commands to the device with one exception (REPORT LUNs), so that the LUN stays isolated to the owning virtual machine | Doesn't matter! iSCSI traffic passes like all other network traffic |
| Hardware Characteristics | Hidden | Exposed to the VM | Same as presenting a LUN to a physical machine |
| File Locking | Advanced file locking | N/A | Handled by the guest |
| Cluster Type | Virtual to virtual | Physical to virtual | Any type to any type |
| Size | Greater than 2 TB | Greater than 2 TB | As large as the guest supports |

* vMotion typically causes a timeout and/or failover of WSFC.
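To make the table easier to apply, the sketch below transcribes its first five rows into a lookup and filters the storage options by the features a deployment needs. The names `SUPPORT`, `OPTIONS`, and `candidates` are hypothetical, and the values simply mirror the table above rather than any official support matrix; for iSCSI, snapshot and clone cover the virtual machine only, not the guest-attached LUN.

```python
# Feature support per storage option, transcribed from the comparison table.
# For iSCSI, "snapshot" and "clone" apply to the VM itself; the LUN attached
# inside the guest is not part of the operation.
SUPPORT = {
    "fault_tolerance": {"rdm_virtual": True, "rdm_physical": False, "iscsi": True},
    "snapshot":        {"rdm_virtual": True, "rdm_physical": False, "iscsi": True},
    "clone":           {"rdm_virtual": True, "rdm_physical": False, "iscsi": True},
    "vmotion":         {"rdm_virtual": True, "rdm_physical": True,  "iscsi": True},
    "storage_vmotion": {"rdm_virtual": True, "rdm_physical": True,  "iscsi": True},
}

OPTIONS = ("rdm_virtual", "rdm_physical", "iscsi")


def candidates(required):
    """Return the storage options that support every required feature."""
    unknown = set(required) - set(SUPPORT)
    if unknown:
        raise KeyError(f"unknown features: {sorted(unknown)}")
    return [opt for opt in OPTIONS if all(SUPPORT[f][opt] for f in required)]


if __name__ == "__main__":
    print(candidates(["fault_tolerance", "snapshot"]))
    print(candidates(["vmotion", "storage_vmotion"]))
```

The vMotion footnote still applies: a `True` in that row does not mean a WSFC cluster will ride through the migration without a failover.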
