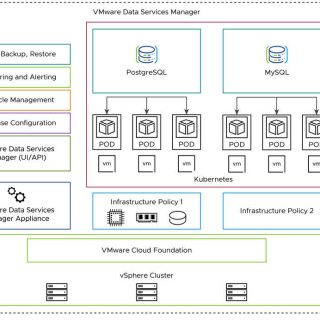

Data is the foundation of any organization in today’s digital-first world. Managing databases effectively is essential, but it brings challenges in scalability, maintenance, security, and cross-environment compatibility. VMware Data Services Manager (DSM) is a powerful tool for streamlining and simplifying database administration in modern infrastructures.

This blog takes an in-depth look at VMware Data Services Manager’s features, supported solutions, deployment strategies, advantages, and use cases. To help you make informed decisions about database operations in your company, we’ll also compare it with conventional database management techniques and other options.