VMDK Provisioning Types

VMDK (Virtual Machine Disk) is designed to mimic the operation of a physical disk. Virtual disks are stored as one or more VMDK files on the host computer or a remote storage device, and appear to the guest operating system as standard disk drives.

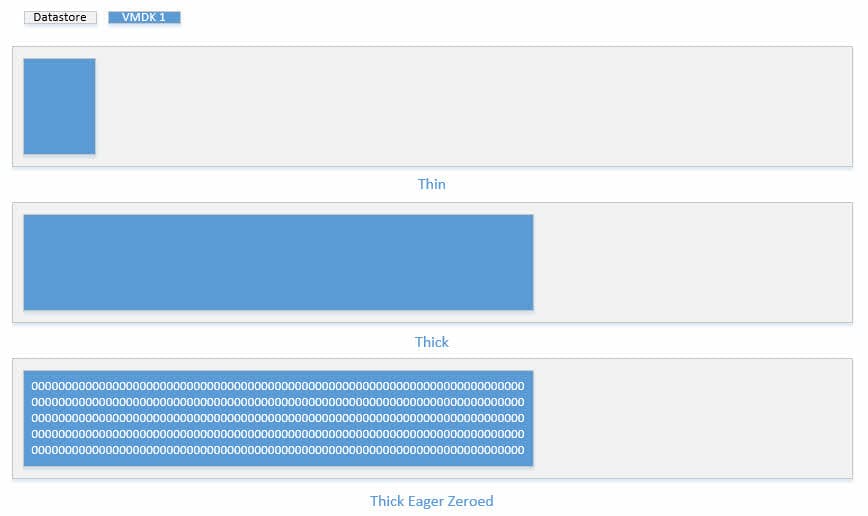

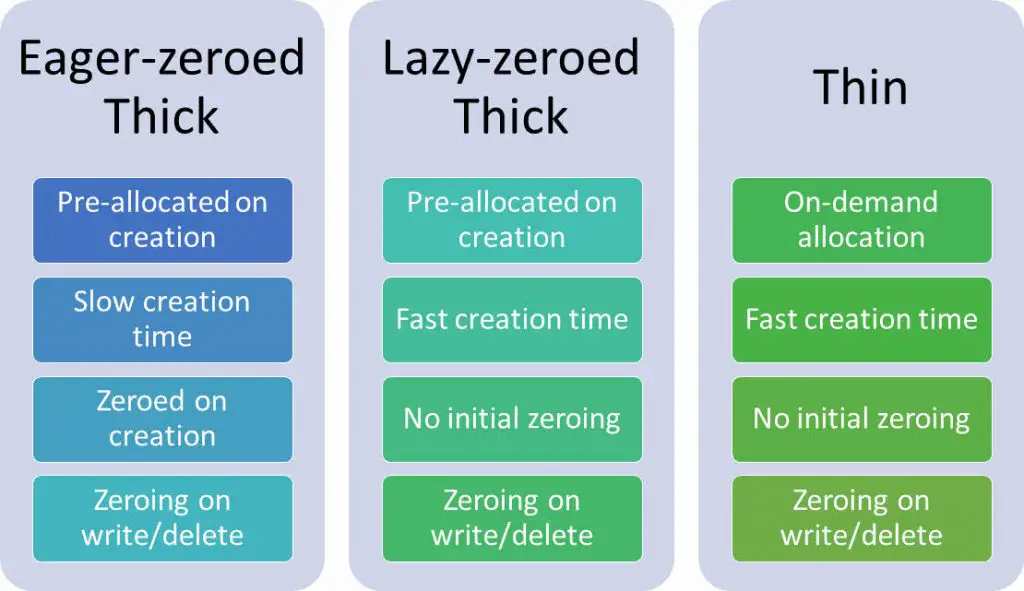

VMware supports three provisioning types:

- Thin Provisioned

- Thick Provisioned (lazy-zeroed)

- Eager-zeroed Thick Provisioned

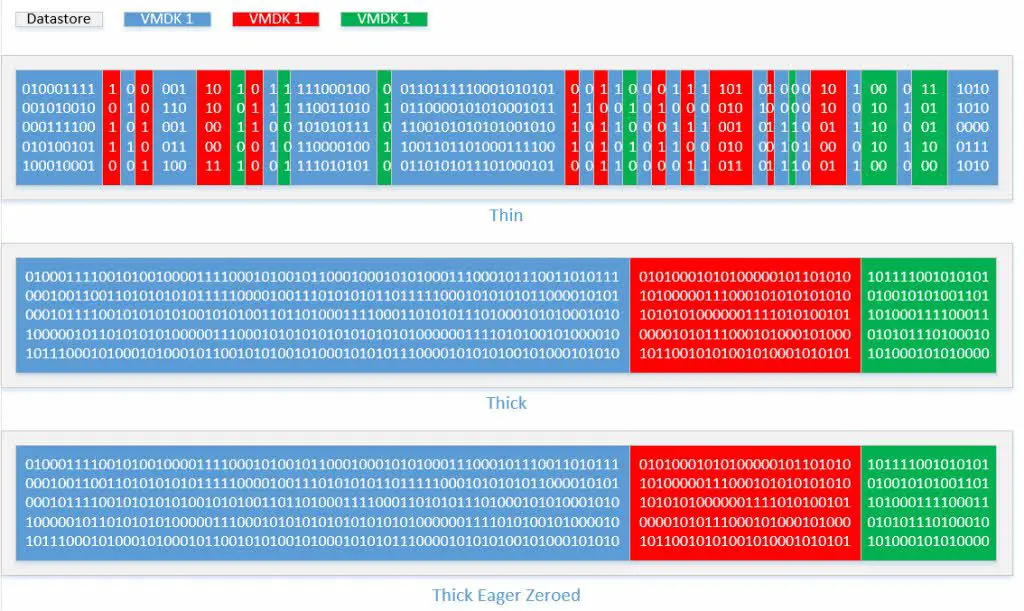

The primary distinction between the three provisioning types lies in how new blocks are prepared when a VM consumes them for the first time. The three provisioning schemes have different default, day-zero behavior:

| VMDK Provisioning Type | Blocks allocated on creation? | Blocks zeroed on creation? |
|---|---|---|
| Thin | No | No |
| Thick | Yes | No |
| Eager-zeroed Thick | Yes | Yes |

In this context, “allocated” means that the space is marked as in-use by the VMFS filesystem. This guarantees that the blocks promised to the VMDK will be available, and prevents overprovisioning of storage resources. “Zeroed” means that the blocks have been erased and are ready for consumption by the VM. These distinctions can cause differences in write I/O performance, because different VMFS filesystem-level steps are required depending on the provisioning type. When a VM issues a write to a virtual disk, the block being written may first need to go through a preparation phase, depending on the allocation scheme.
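The effect of "allocated" on datastore space accounting can be illustrated with a small sketch. This is a simplified model, not VMFS's actual accounting: the `Vmdk` class, the disk names, the sizes, and the 250 GiB datastore are all hypothetical, and thin disks are assumed to consume exactly what the guest has written.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass
class Vmdk:
    name: str
    provisioned_bytes: int  # size presented to the guest
    written_bytes: int      # space the guest has consumed so far
    provisioning: str       # "thin", "thick", or "eager-zeroed thick"


def allocated_bytes(disk: Vmdk) -> int:
    """Space marked in-use on the datastore for this disk (simplified model)."""
    if disk.provisioning == "thin":
        # Thin disks allocate only as blocks are consumed.
        return disk.written_bytes
    # Both thick variants allocate the full size at creation.
    return disk.provisioned_bytes


# Hypothetical disks: each presents 100 GiB, and each guest has written 20 GiB.
disks = [
    Vmdk("app.vmdk", 100 * GIB, 20 * GIB, "thin"),
    Vmdk("db.vmdk", 100 * GIB, 20 * GIB, "thick"),
    Vmdk("log.vmdk", 100 * GIB, 20 * GIB, "eager-zeroed thick"),
]
datastore_capacity = 250 * GIB  # hypothetical datastore size

in_use = sum(allocated_bytes(d) for d in disks)
promised = sum(d.provisioned_bytes for d in disks)
overcommitted = promised > datastore_capacity

print(f"in use: {in_use // GIB} GiB, promised: {promised // GIB} GiB, "
      f"overcommitted: {overcommitted}")
```

In this model only the thin disk can contribute to overcommitment, since the space promised to the two thick disks is already reserved.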

All preparation in VMFS is done in 1 MiB increments. This preparation only occurs when a new block is touched for the first time:

- New blocks on thin-provisioned VMDKs

  - The space needed to satisfy the new block is allocated on the VMFS filesystem.

  - The new block is zeroed by VMFS to ensure consistency.

  - The new block is ready, and the VM’s write is processed. The payload is written to the new block.

- New blocks on thick-provisioned VMDKs

  - The new block is zeroed by VMFS to ensure consistency.

  - The new block is ready, and the VM’s write is processed. The payload is written to the new block.

- New blocks on eager-zeroed thick-provisioned VMDKs

  - Not applicable. All blocks are pre-allocated and pre-zeroed when eager-zeroing is used. The payload is written immediately, as there are no net-new blocks.
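The sequences above can be summarized as a lookup from provisioning type to the steps performed on the first write to a new block. This is only an illustrative sketch; the function name and the step labels are hypothetical and do not correspond to any VMware API.

```python
# Steps performed the first time a VM writes to a new 1 MiB block,
# keyed by provisioning type (labels are illustrative only).
FIRST_WRITE_STEPS = {
    "thin": ["allocate", "zero", "write"],
    "thick": ["zero", "write"],
    "eager-zeroed thick": ["write"],
}


def first_write_steps(provisioning):
    """Return the ordered steps for a first write to a new block."""
    try:
        return list(FIRST_WRITE_STEPS[provisioning])
    except KeyError:
        raise ValueError(f"unknown provisioning type: {provisioning!r}")


for kind in FIRST_WRITE_STEPS:
    print(f"{kind}: {' -> '.join(first_write_steps(kind))}")
```

Writes to a block that has already been prepared skip straight to the final step for every provisioning type.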

Different Provisioning Types, Different Write Performance

The preparation steps associated with thin and regular thick-provisioned VMDKs add some overhead to write operations. In normal production workloads this overhead is not meaningful, and performance is similar across all provisioning types. During large-scale consumption of new blocks, however, performance differences may be observable to the end user, because a payload cannot be written until its block has been prepared. A large write operation initiated by a guest OS therefore involves a large volume of block preparation, which can result in observably lower performance on thin or regular thick-provisioned disks compared to eager-zeroed disks.
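To get a feel for how much preparation a large write triggers, the following sketch counts the 1 MiB blocks that must be prepared before a write can complete. It is a toy model under stated assumptions: the `SimulatedVmdk` class is hypothetical, it tracks only which blocks have been prepared, and it says nothing about how long preparation actually takes.

```python
MIB = 1024 * 1024


class SimulatedVmdk:
    """Toy model tracking which 1 MiB blocks have already been prepared."""

    def __init__(self, provisioning, size_bytes):
        self.provisioning = provisioning
        self.size_blocks = -(-size_bytes // MIB)  # ceiling division
        if provisioning == "eager-zeroed thick":
            # Every block is allocated and zeroed at creation time.
            self.prepared = set(range(self.size_blocks))
        else:
            # Thin and regular thick disks prepare blocks on first touch.
            self.prepared = set()

    def write(self, offset, length):
        """Return how many blocks had to be prepared before this write."""
        if length <= 0:
            return 0
        first = offset // MIB
        last = (offset + length - 1) // MIB
        new_blocks = set(range(first, last + 1)) - self.prepared
        self.prepared |= new_blocks
        return len(new_blocks)


thin = SimulatedVmdk("thin", 1024 * MIB)
eager = SimulatedVmdk("eager-zeroed thick", 1024 * MIB)

first_pass = thin.write(0, 256 * MIB)    # every touched block is new
second_pass = thin.write(0, 256 * MIB)   # same blocks, already prepared
eager_pass = eager.write(0, 256 * MIB)   # nothing to prepare

print(first_pass, second_pass, eager_pass)
```

The second pass over the same region needs no preparation, which matches the point that the overhead applies only while new blocks are being consumed.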

The degree of any observable performance differential will vary based on numerous factors. These include the overall load level within the compute environment, the load on the storage array, and the storage array’s handling of zero data. The overheads involved in thin and regular thick-provisioned VMDKs are modest, though at high volume they can become apparent. The primary external factor in write performance (beyond ESXi and VMFS behaviors) is the behavior of the specific storage array. Storage platforms handle zero data differently (for example, transparent page compression or thin volume/LUN zeroing behaviors), and this can change end-user performance within VMs. For additional information on best practices for provisioning schemes, array-specific handling of zero data, thin volume/LUN preparation, or related topics, VMware recommends engaging with the applicable storage vendor for specific guidance.

In general, thin-provisioned VMDKs are useful because they make space management easier and result in less wasted space: with thin provisioning, vSphere’s advanced space-reclamation technologies can operate both at the datastore level and within many guest operating systems. Eager-zeroed thick provisioning is typically recommended only for highly latency-sensitive applications, or where the overhead of the allocation/zeroing process should be avoided. This recommendation may also vary depending on the array manufacturer’s recommendations.

Reference

Understanding large-scale sequential write performance in VMDKs residing on VMFS datastores (57254)