Ceph, the open-source software-defined storage (SDS) heavyweight, has long been praised for its versatility, scalability, and strong feature set. But creating and maintaining a Ceph cluster, especially a small one, can be a difficult undertaking best left to storage experts and experienced system administrators. Enter MicroCeph, an opinionated and streamlined way to deploy Ceph that aims to make this powerful storage system accessible to everyone.

Imagine managing a fully functional Ceph cluster with only a few commands. That is MicroCeph’s appeal. It uses Snaps, a sandboxed and self-contained Linux packaging format, to deliver a preconfigured Ceph experience. Laborious orchestration, daemon wrangling, and much of the manual configuration go away; MicroCeph handles most of the work, from deployment to ongoing management.

Key Benefits of MicroCeph

- Effortless Deployment: Gone are the days of poring over documentation and wrestling with intricate configurations. MicroCeph installs with a single snap command, and one more command bootstraps the cluster for you.

- Simplified Management: Say goodbye to complex monitoring and maintenance. MicroCeph provides a compact command-line interface (the microceph command) for managing your cluster, making routine tasks like adding storage or checking cluster status a breeze.

- Ideal for Small Deployments: Don’t let the “micro” moniker fool you. MicroCeph caters perfectly to small and medium-sized businesses, edge deployments, and development environments, offering the power of Ceph without the enterprise complexity.

- Ceph at its Core: While streamlined, MicroCeph doesn’t compromise on functionality. It utilizes the native Ceph daemons and protocols, ensuring compatibility with existing Ceph ecosystems and tools.

- Scalability with Ease: Start small and grow as your needs evolve. MicroCeph lets you seamlessly add nodes to your cluster, expanding storage capacity and performance without major disruptions.

- Secure and Reliable: Built on the rock-solid foundation of Ceph, MicroCeph inherits its robust distributed architecture, redundancy, and self-healing capabilities.

Under the Hood of MicroCeph

MicroCeph’s secret sauce lies in its opinionated approach. It streamlines the Ceph deployment by making certain default choices based on best practices for small-scale setups. This includes:

- Single-rack Deployments: It is designed for one-rack clusters, optimizing performance and simplifying network topology.

- Snap Packaging: The use of Snaps ensures consistent, self-contained installations and simplified updates.

- Dqlite for Configuration Management: A distributed SQLite database keeps track of cluster configuration and node information.

- Native Ceph Protocols: It supports all native Ceph protocols (RBD, CephFS, and RGW) for flexible storage options.

Use Cases for MicroCeph

The ease and efficiency of MicroCeph make it ideal for various scenarios:

- Development and Testing: Set up a dedicated Ceph environment for development and testing purposes, isolated from production.

- Edge Computing: Deploy a reliable and scalable storage solution for resource-constrained edge deployments.

- Private Cloud Storage: Build your own private cloud storage infrastructure with the power and flexibility of Ceph.

- Media & Entertainment Workflows: Manage large media files and collaborate on projects efficiently with high-performance storage.

- Backup and Disaster Recovery: Leverage Ceph’s redundancy and self-healing features for secure and reliable backups.

MicroCeph vs. Traditional Ceph Deployment

The table below highlights the key differences between MicroCeph and a traditional Ceph deployment:

| Feature | MicroCeph | Traditional Ceph Deployment |
|---|---|---|
| Complexity | Minimal | High |
| Deployment Time | Minutes | Hours/Days |
| Management Overhead | Low | High |
| Ideal for | Small-scale deployments, edge, development | Large-scale deployments, enterprise environments |
| Flexibility | Opinionated, focused on best practices | Highly customizable |

MicroCeph Architecture: Unpacking the Simplicity

As its name implies, MicroCeph makes the vast and intricate world of Ceph more approachable. How does it accomplish this? Let’s examine the parts of its architecture.

Core Layers

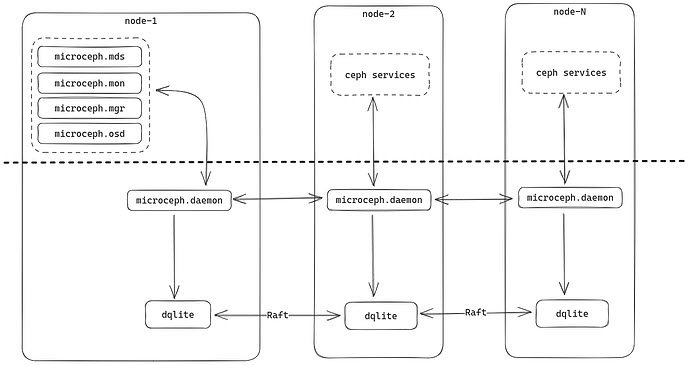

Its architecture rests on three core layers:

- Snap Layer: This forms the basis, providing isolation and consistency through self-contained Snaps. Imagine a pre-packaged Ceph environment delivered in a neat box, ready to deploy.

- Management Layer: This layer handles cluster configuration and orchestration. Think of it as the conductor, keeping everything in harmony. The distributed SQLite database (Dqlite) acts as the score sheet, keeping track of nodes, disks, and service placement.

- Ceph Daemons: The heart of the matter, these are the native Ceph daemons like Monitors, OSDs (Object Storage Daemons), and MDS (Metadata Server). They handle data placement, replication, and access, powering the storage magic.

Components in Action

Imagine we’re setting up a cluster. Here’s what happens:

- Snap Installation: You install the MicroCeph Snap on each node. This puts the Ceph daemons and the MicroCeph management service in place, with defaults chosen for small-scale setups.

- Cluster Initialization: One node is designated as the initial monitor, and you run microceph cluster bootstrap on it to kick things off. This registers the node in Dqlite and sets up the basic cluster structure.

- Node Joining: For each additional node, you generate a join token on an existing member with microceph cluster add and pass it to microceph cluster join on the new node, which registers it in Dqlite. The management layer places services across the nodes.

- Storage Access: You use standard Ceph clients (RBD, CephFS, RGW) to access the storage provided by the cluster. Data is distributed and replicated across the cluster for redundancy and resilience.

Key Architectural Points

- Single-rack Focus: It optimizes for one-rack deployments, simplifying network topology and maximizing performance for small-scale environments.

- Native Ceph Protocols: While streamlined, it stays true to Ceph’s core by using native protocols and interfaces, ensuring compatibility with existing tools and ecosystems.

- Opinionated Design: It makes certain choices for you based on best practices, eliminating the need for complex configuration decisions. This streamlines deployment and management.

- Scalability on Demand: Start small with a few nodes and scale your cluster by adding more nodes and disks as your storage needs grow.
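To make the capacity side of scaling concrete, here is a rough back-of-the-envelope sketch. It assumes a replicated pool with Ceph’s default size of 3 and ignores overhead such as metadata and free-space safety margins; the function name and the sample disk sizes are illustrative only.

```shell
#!/usr/bin/env bash
# usable_gib RAW_GIB [REPLICAS]
# Rough usable capacity of a replicated pool: raw capacity / replica count.
# REPLICAS defaults to 3 (an assumption matching Ceph's default pool size).
# Integer arithmetic, rounded down.
usable_gib() {
  local raw_gib=$1 replicas=${2:-3}
  echo $(( raw_gib / replicas ))
}

# Three nodes with one 4096 GiB disk each, then a fourth node added.
usable_gib $(( 3 * 4096 ))   # prints 4096
usable_gib $(( 4 * 4096 ))   # prints 5461
```

Adding a node grows raw capacity by a full disk, but usable capacity only by about a third of it, which is worth keeping in mind when planning growth.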

Taken together, MicroCeph coordinates the Snap layer, the management layer, and the native Ceph daemons to deliver a simplified yet powerful storage solution.

Its architecture strikes a balance between ease of use and functionality. It leverages Snaps, automates tasks, and makes strategic choices to provide a simplified Ceph experience without sacrificing core capabilities.

Diving into MicroCeph: A Step-by-Step Installation Guide

MicroCeph packages the powerful Ceph storage system in a form meant to free you from complicated setups and administrative hassles. But how do you actually get it running? Don’t worry; this guide walks you through the installation step by step.

Prerequisites:

Before embarking on this storage adventure, ensure you have the following ingredients:

- Linux machines: At least one node is required, with additional nodes for scaling your storage pool. Each node should have sufficient storage space and adequate network connectivity.

- Snapd: This software package manages Snaps, self-contained bundles of applications and their dependencies. Make sure it’s installed and running on all your nodes.

- Terminal access: You’ll be wielding the power of the command line in this journey.
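Before moving on, a quick check of the prerequisites can save a failed install. The sketch below only tests whether the named commands are on the PATH; the function name is made up for this example, and the check says nothing about disk space or networking.

```shell
#!/usr/bin/env bash
# check_prereqs CMD...
# Reports whether each named command is available on PATH.
# Returns 0 when all are present, 1 if any is missing.
check_prereqs() {
  local missing=0 cmd
  for cmd in "$@"; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "ok: $cmd"
    else
      echo "missing: $cmd"
      missing=1
    fi
  done
  return "$missing"
}

# Typical call for this guide: snap (provided by snapd) and sudo.
check_prereqs snap sudo || echo "Install the missing tools before continuing."
```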

Step 1: Install the MicroCeph Snap

Open your terminal and type the following command on each node:

sudo snap install microceph --channel quincy/edge

This installs MicroCeph from the quincy/edge channel, which tracks development builds of the Quincy-based release. Alternatively, install from the stable channel with:

sudo snap install microceph --channel latest/stable

Once installed, hold the Snap so that it is not refreshed automatically; on a storage cluster, upgrades are better applied deliberately:

sudo snap refresh --hold microceph
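If you install on several nodes, it can help to wrap the two commands in a small script. The sketch below is one way to do that; the `run` helper, the `DRY_RUN` variable, and the function name are conventions of this example, not part of snapd or MicroCeph. By default it only prints what it would do. Note that `snap refresh --hold` prevents automatic refreshes rather than triggering one.

```shell
#!/usr/bin/env bash
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# install_microceph [CHANNEL]
# Installs the snap from CHANNEL, then holds automatic refreshes.
install_microceph() {
  local channel=${1:-latest/stable}
  run sudo snap install microceph --channel "$channel"
  run sudo snap refresh --hold microceph
}

install_microceph                            # dry run, default channel
# DRY_RUN=0 install_microceph quincy/edge    # real install from another channel
```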

Step 2: Initialize the Cluster

Choose one node to be the initial monitor. On that node, run the following command to kickstart the cluster:

sudo microceph cluster bootstrap

This sets up the basic cluster structure and registers the node in the distributed SQLite database (Dqlite) that MicroCeph uses for configuration management.

Step 3: Join Additional Nodes (Optional)

If you have more nodes ready to join the party, first generate a join token for each one. On the node you bootstrapped in step 2, run:

sudo microceph cluster add [node-name]

Replace [node-name] with the hostname of the joining node. The command prints a one-time token; pass it to the join command on the new node:

sudo microceph cluster join [token]

This registers the joining node in Dqlite. Repeat both commands for every additional node.
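MicroCeph’s CLI joins nodes with a one-time token: `microceph cluster add` on an existing member prints the token, and `microceph cluster join` on the new node consumes it. With several nodes it is easy to lose track of which command runs where, so the sketch below prints the sequence as a checklist. It executes nothing; the function name, the node names, and the `<token-for-...>` placeholders are hypothetical.

```shell
#!/usr/bin/env bash
# join_plan FIRST_NODE [NEW_NODE...]
# Prints, in order, the commands to run by hand on each machine.
# <token-for-NAME> stands for the string printed by
# "microceph cluster add NAME" on the first node.
join_plan() {
  local first=$1 node
  shift
  echo "[$first] sudo microceph cluster bootstrap"
  for node in "$@"; do
    echo "[$first] sudo microceph cluster add $node"
    echo "[$node] sudo microceph cluster join <token-for-$node>"
  done
  echo "[$first] sudo microceph cluster list"
}

join_plan node-1 node-2 node-3
```

The final `microceph cluster list` is there so you can confirm that every node appears as a cluster member.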

Step 4: Verify the Cluster Status

To confirm that everything is running smoothly, run the following command on any node:

sudo ceph status

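This displays the health and status of your Ceph cluster, including information about monitors, OSDs (Object Storage Daemons), and storage pools. A freshly bootstrapped cluster has no OSDs and therefore no usable capacity until you add disks, for example with sudo microceph disk add /dev/sdb --wipe (the device path is only an example, and --wipe erases the disk).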
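For scripted checks, the one-line `ceph health` output is easier to work with than the full status report, since it begins with HEALTH_OK, HEALTH_WARN, or HEALTH_ERR. The sketch below maps that first word to an exit code; the function name and the exit-code convention are choices made for this example, and the warning text is just a sample input.

```shell
#!/usr/bin/env bash
# classify_health TEXT
# Maps the first word of "ceph health" output to an exit code:
#   HEALTH_OK -> 0, HEALTH_WARN -> 1, HEALTH_ERR -> 2, anything else -> 3.
classify_health() {
  case ${1%% *} in
    HEALTH_OK)   return 0 ;;
    HEALTH_WARN) return 1 ;;
    HEALTH_ERR)  return 2 ;;
    *)           return 3 ;;
  esac
}

# On a cluster node you would feed it live output, for example:
#   classify_health "$(sudo ceph health)" || echo "cluster needs attention"
classify_health "HEALTH_WARN 1 pool(s) have no replicas configured" ||
  echo "exit code: $?"   # prints "exit code: 1"
```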

Step 5: Access the Storage Pool

Now that your MicroCeph cluster is humming along, it’s time to put it to work! Use the appropriate Ceph client (RBD, CephFS, RGW) to interact with the storage pool. Here are some examples:

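- RBD (block device): Create a pool and an image, map the image on the client, then format and mount the resulting device. The pool name is an example, and /dev/rbd0 is an assumption; use the device path that rbd map prints:

sudo ceph osd pool create block_pool

sudo rbd create my-block --size 1024 --image-feature layering --pool block_pool

sudo rbd map my-block --pool block_pool

sudo mkfs.ext4 /dev/rbd0 && sudo mount /dev/rbd0 /mnt/my-block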

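- CephFS (distributed filesystem): Create a filesystem from two existing pools (the pool names here are examples), then mount it with the kernel client. The mount assumes that ceph-common is installed on the client and that the cluster’s ceph.conf and admin keyring are available under /etc/ceph:

sudo ceph fs new my-fs cephfs_meta cephfs_data

sudo mount -t ceph :/ /mnt/my-fs -o name=admin,fs=my-fs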

- RGW (object storage gateway): Enable the gateway with sudo microceph enable rgw, then access object storage through its S3- or Swift-compatible HTTP API.
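For the object gateway, a typical first step is to enable the service and create a user whose keys an S3 client can use. The sketch below prints the commands by default rather than running them. The `run` helper, `DRY_RUN`, the function name, and the user ID are all assumptions of this example; depending on how the snap’s aliases are set up, `radosgw-admin` may need to be invoked as `microceph.radosgw-admin`.

```shell
#!/usr/bin/env bash
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# setup_rgw_user USER_ID
# Enables the gateway, then creates an object-storage user.
# USER_ID doubles as the display name to keep the sketch short.
setup_rgw_user() {
  local user_id=$1
  run sudo microceph enable rgw
  run sudo radosgw-admin user create --uid="$user_id" --display-name="$user_id"
}

setup_rgw_user demo-user
```

When run for real, `radosgw-admin user create` prints a JSON document that includes the generated access key and secret key for the new user.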

Congratulations! You’ve successfully installed and configured your cluster. Remember, this is just the beginning. You can now explore the vast universe of Ceph features and functionalities, all wrapped in the user-friendly MicroCeph package.

Bonus Tips:

- For detailed instructions and troubleshooting tips, refer to the official documentation: https://github.com/canonical/microceph

- The community forum is a great resource for questions and discussions: https://discourse.ubuntu.com/c/desktop/8

- Experiment with different storage configurations and services to tailor MicroCeph to your specific needs.

With MicroCeph by your side, conquering the world of scalable storage just became a whole lot easier. So, go forth and unleash the power of your very own Ceph cluster!

Additional Notes:

- This guide provides a general overview of the installation process. Specific commands and configurations may vary depending on your individual setup and desired features.

- Remember to adjust the commands and instructions based on your chosen Snap channel (stable vs. edge).

- Feel free to explore the plethora of online resources and tutorials available for MicroCeph and Ceph. The community is vibrant and eager to help you on your storage journey.

Conclusion

In summary, MicroCeph is more than just a streamlined Ceph deployment; it’s an open door to the world of potent, scalable storage. We’ve examined its architecture, broken down the installation procedure, and offered resources to help you set up your own cluster.

Remember that MicroCeph’s strength lies in the simplicity of its deployment and administration. So don’t be put off by Ceph’s complexity; MicroCeph lowers the barriers and invites you to experience Ceph’s dependability and flexibility firsthand. Explore its features in more detail, customize it to meet your needs, and engage with the active user and developer community.

The journey into the world of Ceph might have just begun, but with MicroCeph as your guide, it’s a journey packed with possibilities. Go forth, unleash the power of scalable storage, and watch your digital universe flourish!

Further Exploration:

- Explore the documentation for detailed configuration options and advanced features.

- Join the community forum to connect with other users and experts, share best practices, and get your questions answered.

- Discover the vast ecosystem of tools and applications built on top of Ceph, expanding the possibilities of your storage solution.

As you navigate the world of Ceph, remember that knowledge is power. Be a student, an explorer, and an experimenter. MicroCeph provides the foundation; the rest is up to your imagination and your desire to unlock the potential of powerful and accessible storage.

External Links

MicroCeph documentation (readthedocs-hosted.com)

Cloud storage at the edge with MicroCeph | Ubuntu

GitHub – canonical/microceph: Ceph for a one-rack cluster and appliances