Ceph is one of the most popular software-defined storage platforms. It offers object storage, block devices, and a file system (CephFS) for storing data in modern data centers and in public and private clouds. Even the best and most popular solutions need alternatives, so in this post we’ll review some alternatives to Ceph.

Ceph Storage Platform Alternatives List

- MinIO

- BeeGFS

- GlusterFS

- LizardFS

- XtreemFS

- Quobyte

- StorPool

- OpenIO

Before the review, I have to mention that each alternative has its own strengths, and you should choose one according to your requirements and use cases.

MinIO

MinIO supports the widest range of use cases across the largest number of environments. Cloud native since inception, MinIO’s software-defined suite runs seamlessly in the public cloud, private cloud and at the edge – making it a leader in the hybrid cloud and multi-cloud object storage. With industry leading performance and scalability, MinIO can deliver a range of use cases from AI/ML, analytics, backup/restore and modern web and mobile apps.

Hybrid and Multi-Cloud

MinIO is a natural fit for enterprises looking for a consistent, performant and scalable object store for their hybrid cloud strategies. Kubernetes-native by design, S3 compatible from inception, MinIO has more than 7.7M instances running in AWS, Azure and GCP today – more than the rest of the private cloud combined. When added to millions of private cloud instances and extensive edge deployments – MinIO is the hybrid cloud leader.

Born cloud native

MinIO was built from scratch in the last four years and is native to the technologies and architectures that define the cloud. These include containerization, orchestration with Kubernetes, microservices and multi-tenancy. No other object store is more Kubernetes-friendly.

MinIO is pioneering high performance object storage

MinIO is the world’s fastest object storage server. With READ/WRITE speeds of 183 GiB/s and 171 GiB/s on standard hardware, object storage can operate as the primary storage tier for a diverse set of workloads, ranging from Spark, Presto, TensorFlow, and H2O.ai to a replacement for Hadoop HDFS.

Built on the principles of web scale

MinIO leverages the hard-won knowledge of the web scalers to bring a simple scaling model to object storage. With MinIO, scaling starts with a single cluster, which can be federated with other MinIO clusters to create a global namespace, spanning multiple data centers if needed. It is one of the reasons that more than half of the Fortune 500 runs MinIO.

MinIO is 100% open source under the GNU Affero General Public License Version 3 (AGPLv3). This means that MinIO’s customers are free from lock-in, free to inspect, free to innovate, free to modify and free to redistribute. The diversity of its deployments has hardened the software in ways that proprietary software can never offer.

BeeGFS

BeeGFS is a hardware-independent POSIX parallel file system (a.k.a. software-defined parallel storage) developed with a strong focus on performance and designed for ease of use, simple installation, and management. BeeGFS is developed under an Available Source model (the source code is publicly available), offering a self-supported Community Edition and a fully supported Enterprise Edition with additional features and functionality. BeeGFS is designed for all performance-oriented environments, including HPC, AI and Deep Learning, Media & Entertainment, Life Sciences, and Oil & Gas (to name a few).

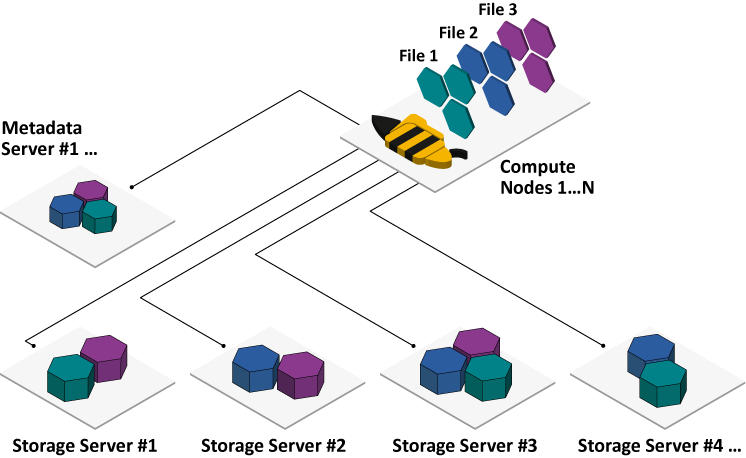

BeeGFS transparently spreads user data across multiple servers. By increasing the number of servers and disks in the system, you can simply scale performance and capacity of the file system to the level that you need, seamlessly from small clusters up to enterprise-class systems with thousands of nodes.
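To make the idea of spreading file data across servers concrete, here is a minimal sketch of round-robin striping in Python. It is an illustration only and not BeeGFS code: the function names `stripe` and `reassemble`, the chunk size, and the number of targets are all assumptions made for this example.

```python
from typing import List


def stripe(data: bytes, num_targets: int, chunk_size: int) -> List[List[bytes]]:
    """Split data into fixed-size chunks and assign them round-robin to targets.

    Hypothetical helper for illustration; real parallel file systems use
    their own striping patterns and on-disk formats.
    """
    if num_targets < 1 or chunk_size < 1:
        raise ValueError("num_targets and chunk_size must be positive")
    targets: List[List[bytes]] = [[] for _ in range(num_targets)]
    for index, offset in enumerate(range(0, len(data), chunk_size)):
        # Chunk i lands on target i modulo the number of targets.
        targets[index % num_targets].append(data[offset:offset + chunk_size])
    return targets


def reassemble(targets: List[List[bytes]]) -> bytes:
    """Read the chunks back in their original order."""
    longest = max((len(t) for t in targets), default=0)
    chunks = []
    for row in range(longest):
        for target in targets:
            if row < len(target):
                chunks.append(target[row])
    return b"".join(chunks)


if __name__ == "__main__":
    payload = bytes(range(10))
    layout = stripe(payload, num_targets=3, chunk_size=2)
    for number, chunks in enumerate(layout):
        print(f"target {number}: {chunks}")
    print(reassemble(layout) == payload)
```

Because each target only holds a share of the chunks, adding targets spreads both the capacity and the I/O load over more machines, which is the scaling behavior described above.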

Distributed File Contents and Metadata

One of the most fundamental advantages of BeeGFS is the strict avoidance of architectural bottlenecks or locking situations in the cluster, through the user space architecture.

This design allows both the metadata level and the storage level to scale linearly and without disruption.

HPC Technologies

BeeGFS is built on highly efficient and scalable multithreaded core components with native RDMA support. File system nodes can serve RDMA (InfiniBand, Omni-Path, RoCE) and TCP/IP network connections at the same time and automatically switch to a redundant connection path if any of them fails.

Easy to Use

BeeGFS requires no kernel patches (the client is a patchless kernel module, and the server components are user space daemons). It comes with graphical Grafana dashboards and lets you add more clients and servers to a production system whenever required.

Optimized for Highly Concurrent Access

Simple remote file systems like NFS not only have serious performance problems under highly concurrent access, they can even corrupt data when multiple clients write to the same shared file, which is a typical use case for cluster applications. BeeGFS was specifically designed with such use cases in mind, to deliver optimal robustness and performance under any high I/O load or access pattern.

Client and Server on any Machine

No specific enterprise Linux distribution or other special environment is required to run BeeGFS. It uses existing partitions, formatted with any of the standard Linux file systems, e.g., XFS, ext4 or ZFS, which allows different use cases.

GlusterFS

Gluster is a scalable, distributed file system that aggregates disk storage resources from multiple servers into a single global namespace.

Advantages

- Scales to several petabytes

- Handles thousands of clients

- POSIX compatible

- Uses commodity hardware

- Can use any on-disk filesystem that supports extended attributes

- Accessible using industry standard protocols like NFS and SMB

- Provides replication, quotas, geo-replication, snapshots and bitrot detection

- Allows optimization for different workloads

- Open Source

LizardFS

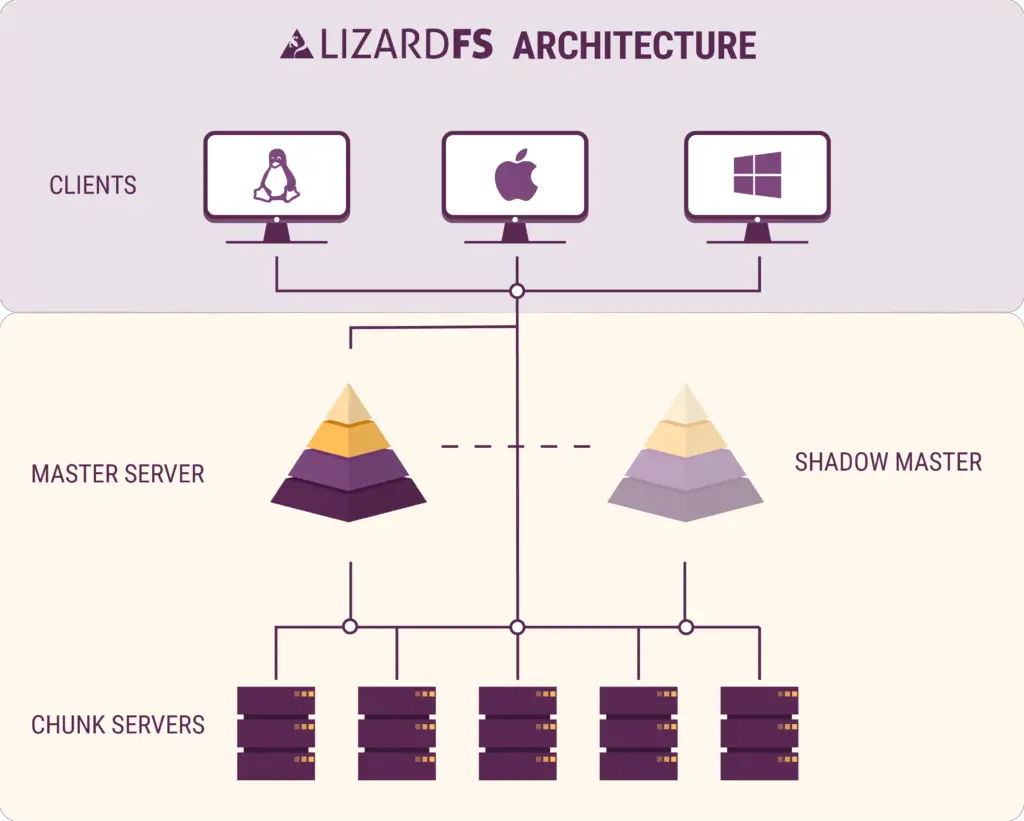

LizardFS is a software-defined storage system: a distributed, scalable, fault-tolerant, and highly available file system. It combines disk space located on several servers into a single namespace that is visible on Unix-like and Windows systems in the same way as other file systems. LizardFS was inspired by the Google File System (GFS), which Google described publicly in 2003.

LizardFS keeps metadata and data separate. Metadata is kept on metadata servers, while data is kept on chunkservers. The diagram above shows a typical installation.

LizardFS makes files secure by keeping all the data in many replicas spread over all available servers. It can also be used to build affordable storage as it runs perfectly on commodity hardware. Disk and server failures are handled transparently without any downtime or data loss.
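The replica idea can be sketched in a few lines of Python. This is a toy model under stated assumptions, not LizardFS code: the functions `place_replicas` and `surviving_chunks`, the `copies` parameter, and the server names are hypothetical, and the "least-loaded server first" rule is just one simple placement policy chosen for the example.

```python
from typing import Dict, Iterable, List, Set


def place_replicas(chunk_ids: Iterable[int], servers: List[str],
                   copies: int) -> Dict[str, Set[int]]:
    """Store each chunk on `copies` distinct servers, preferring the emptiest ones."""
    if copies < 1 or copies > len(servers):
        raise ValueError("copies must be between 1 and the number of servers")
    placement: Dict[str, Set[int]] = {server: set() for server in servers}
    for chunk in chunk_ids:
        # Sort by current load; the server name breaks ties deterministically.
        targets = sorted(servers, key=lambda s: (len(placement[s]), s))[:copies]
        for server in targets:
            placement[server].add(chunk)
    return placement


def surviving_chunks(placement: Dict[str, Set[int]], failed: Set[str]) -> Set[int]:
    """Return the chunks that still have at least one copy on a healthy server."""
    alive: Set[int] = set()
    for server, chunks in placement.items():
        if server not in failed:
            alive |= chunks
    return alive


if __name__ == "__main__":
    layout = place_replicas(range(6), ["cs1", "cs2", "cs3"], copies=2)
    for server, chunks in layout.items():
        print(server, sorted(chunks))
    # Losing any single server leaves every chunk readable.
    print(surviving_chunks(layout, failed={"cs2"}) == set(range(6)))
```

With two copies of every chunk on different servers, any single server can fail without losing data; tolerating more simultaneous failures requires more copies.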

When storage requirements grow, you can scale out a LizardFS installation just by adding new servers at any time, without any downtime. Data chunks are automatically moved to the new servers, because LizardFS continuously balances disk usage across all connected nodes.

Removing a server is just as simple and easy as adding a new one.
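The rebalancing that happens after a server is added can be illustrated with a small simulation. This is a sketch under assumptions, not the actual LizardFS balancing algorithm: the function `rebalance` and the server names are hypothetical, and load is measured simply as the number of chunks per server.

```python
from typing import Dict, Set


def rebalance(placement: Dict[str, Set[int]]) -> int:
    """Move chunks from the fullest to the emptiest server until the chunk
    counts differ by at most one. Returns the number of chunks moved."""
    moves = 0
    while True:
        fullest = max(placement, key=lambda s: (len(placement[s]), s))
        emptiest = min(placement, key=lambda s: (len(placement[s]), s))
        if len(placement[fullest]) - len(placement[emptiest]) <= 1:
            return moves
        # Only move a chunk the destination does not already hold, so
        # replicas of the same chunk never end up on one server.
        chunk = min(placement[fullest] - placement[emptiest])
        placement[fullest].remove(chunk)
        placement[emptiest].add(chunk)
        moves += 1


if __name__ == "__main__":
    cluster = {"cs1": {0, 1, 2, 3}, "cs2": {4, 5, 6, 7}}
    cluster["cs3"] = set()  # a new, empty chunkserver joins the cluster
    moved = rebalance(cluster)
    print(f"moved {moved} chunks")
    for server, chunks in cluster.items():
        print(server, sorted(chunks))
```

Removing a server is the mirror image: its chunks are copied to the remaining servers first, and the same balancing step evens out the load afterwards.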

XtreemFS

XtreemFS has not been updated in five years, so consider Quobyte instead. The original XtreemFS team founded Quobyte Inc. in 2013.

Quobyte

Three key aspects of Quobyte software distinguish it from other offerings. No. 1 is its linear scaling: doubling the node count doubles the performance. No. 2 is unified storage, which allows multiple users to simultaneously work on the same file regardless of the access protocol being used. No. 3 is the system’s ability to monitor, maintain, and heal itself, permitting small teams to start with a few terabytes and grow to manage tens to hundreds of petabytes while continuing to deliver data despite hardware failures.

Key Features

- Software-only solution (zero hardware or kernel dependencies maximize compatibility)

- Self-monitoring and self-healing (manage large clusters with minimal staff)

- High-IOPS, low-latency client (also provides seamless failover)

- Policy-based data management and placement

- Volume Mirroring (perfect for disaster recovery, or as a backup source)

- Runtime reconfigurable (eliminates downtime, changes on the fly)

- Simple, scripted cluster installation (up and running in less than an hour)

- Compatible with any modern Linux distro

- Supports POSIX, NFS, S3, SMB, and Hadoop file access methods (one platform for all workloads)

- Works with HDDs, SSDs, and NVMe devices (no need to buy expensive flash when it’s not needed)

StorPool

StorPool’s enterprise data storage solution enables so-called “converged” deployments, i.e. using the same servers for both storage and computation, therefore making it possible to have a single standard “building block” for the datacenter and slashing costs.

An extension of this concept is tight integration of the software layers running on this converged infrastructure (hypervisor, cloud management, etc.), known as “hyper-convergence” or “hyper-converged” infrastructure.

OpenIO

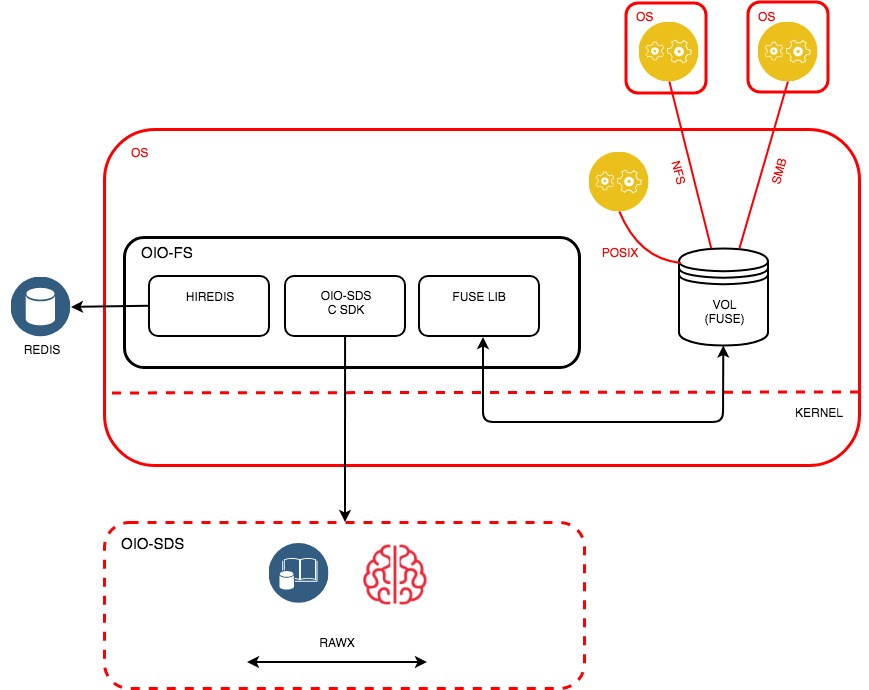

More than yet another object store, OpenIO blends efficiency, hyper-scalability and consistent high performance with the benefits usually found on other platforms. OpenIO is the best S3-compatible storage for large-scale infrastructures and big data workloads.

- Hyper-scalable object storage: Scale seamlessly from Terabytes to Exabytes. Simply add nodes to expand capacity, without rebalancing data, and watch as performance increases linearly.

- Designed for high performance: Transfer data at 1 Tbps and beyond. Experience consistent high performance, even during scaling operations. Ideal for capacity-intensive and challenging workloads.

- Hardware agnostic, lightweight solution: Use servers and storage media that suit your evolving needs. Avoid vendor lock-in. You can combine heterogeneous hardware of different specs, generations, and capacities at any time.

Conclusion

Open-source software platforms are not always free of cost, but you can usually use them as a community edition or with a limited feature set. The storage platforms above share the same goals but differ in their capabilities, so the right choice depends on your requirements and budget. As for Ceph, I think it is still the best, and its community edition has no limitations, but keep a few of the others in mind as alternatives because Ceph may not meet all of your requirements.

Further Reading

What’s SDS (Software-Defined Storage) – Part 2 (Ceph)

External Links

Ceph – Home Page

MinIO – Home Page

Quobyte – Solution Briefs

LizardFS – Home Page

BeeGFS – Home Page

GlusterFS – Administration Guide

OpenIO – Product Overview